Guide to Amazon SageMaker vs Bedrock

This is a comprehensive guide for those looking for a comparison of Amazon SageMaker vs Bedrock in 2024.

To put SageMaker and Bedrock in perspective, it's important to know how AWS positions its AI & Machine Learning services. This is covered first.

Then there will be details on the differences between Amazon SageMaker & Amazon Bedrock in terms of the foundation models accessible from each service, fine-tuning support, performance & pricing.

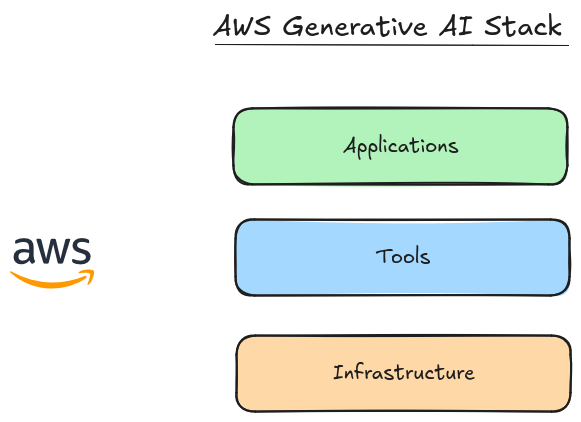

AWS refers to its Generative AI stack as the suite of services that help customers leverage, build and deploy large language models (LLMs).

AWS Generative AI stack

There are 3 layers to their stack. As you move down the layers, there's usually more complexity introduced to how you leverage the services.

Although lower levels of the stack are more complex, they allow more flexibility in how you configure, train and deploy LLM-based applications.

- Applications are the services that leverage LLMs without requiring too much customer configuration or coding

- Tools are the services that help customers develop AI applications on AWS

- Infrastructure is the range of services that help customers train & deploy LLMs & Machine Learning systems

Where are Amazon SageMaker and Bedrock in the AWS GenAI Stack?

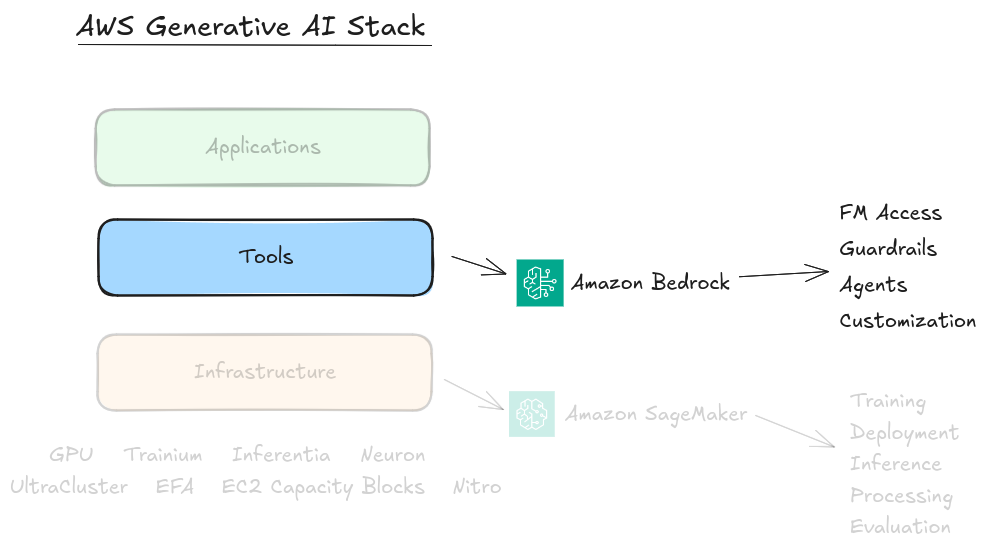

To understand the differences between Bedrock and SageMaker, let's consider their positioning in the AWS Generative AI stack.

Amazon Bedrock

Amazon Bedrock is in the tools layer of the AWS GenAI stack. Bedrock's functionality is geared towards helping customers build & develop custom AI applications leveraging LLMs and Foundation Models (FMs).

Here is the GenAI stack again, this time highlighting where Amazon Bedrock is in the AWS GenAI stack.

Amazon Bedrock provides features such as access to foundation models (such as Claude, Llama & Stable Diffusion), guardrails for controlling the outputs of the models, AI agents for orchestrating interactions between LLMs, and customization tools that allow customers to tweak and fine-tune a limited set of models.

You can read this feature overview in the AWS Bedrock docs.

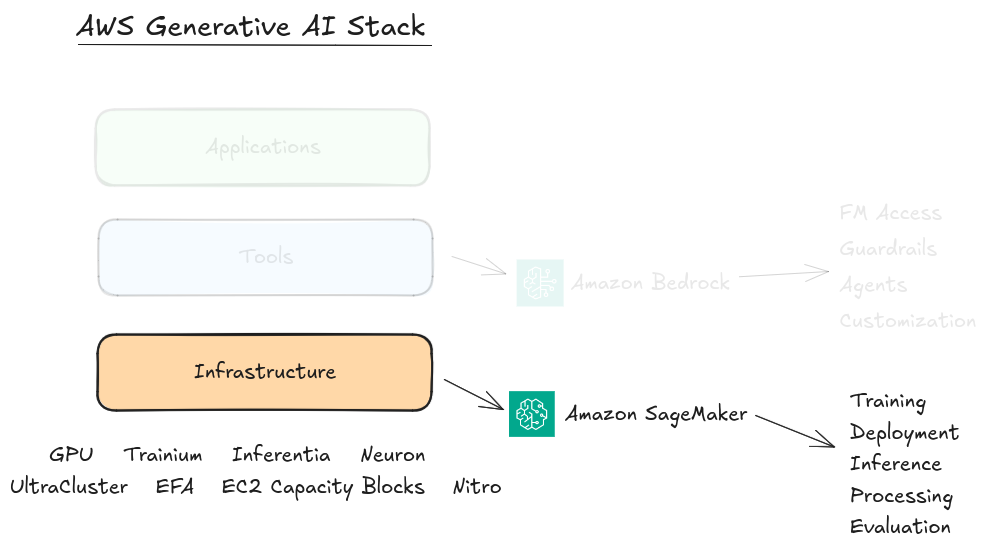

Amazon SageMaker

While Amazon Bedrock is in the tools layer of the stack, Amazon SageMaker is in the infrastructure layer. Amazon SageMaker's capabilities are geared towards customers who are looking to prepare data, train & deploy their own foundation models.

Here we've highlighted where Amazon SageMaker is in the stack.

Amazon SageMaker has been around since 2017 providing customers with a robust set of tools to build, deploy and test Machine Learning models at scale. SageMaker's capabilities are more generic compared to Bedrock so they can be tailored to training many types of models (not just FMs and LLMs).

SageMaker is more useful to those customers who are working lower in the AI stack as it provides a high level of customization that is not possible with Amazon Bedrock.

More on Amazon SageMaker

SageMaker is actually an umbrella term for many of the features, tools & capabilities you'd use to build machine learning and large language models. Tools like SageMaker Notebooks, SageMaker Clarify and SageMaker Ground Truth are all capabilities of SageMaker and are found in the SageMaker Console.

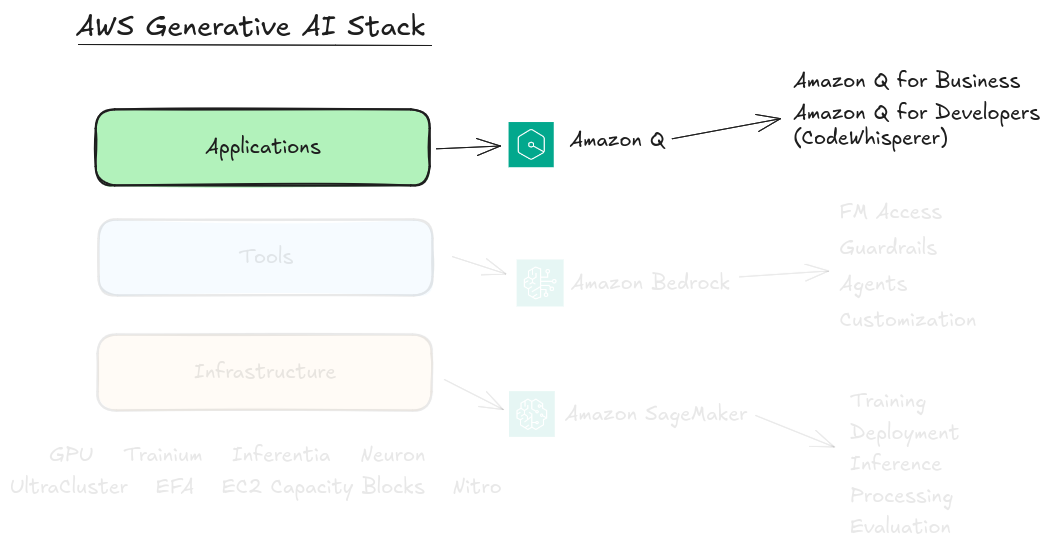

What's at the top of the GenAI Stack?

It's not vital to this topic but, for clarity, the top layer of the AWS Generative AI stack contains services such as Amazon Q for Business & Amazon Q Developer. These are considered application-level services as they abstract the underlying language model implementation details from users. The trade-off is that they are less flexible.

Is there overlap between Amazon SageMaker vs Amazon Bedrock?

Amazon SageMaker is considered to be in the infrastructure layer of the stack and Amazon Bedrock is in the tools layer. Even with them in separate layers there is still overlap of functionality.

One area where there is overlap is when making use of Foundation LLMs. The next sections will consider the differences between the two when it comes to accessing foundation models.

Comparison - Foundation Model Access

Foundation Models are the baseline models that are provided by large model providers such as OpenAI, Anthropic, Meta, Mistral, Cohere and others. Training them usually requires vast resources.

Foundation models have general skills, like being able to interpret requests from users. They are then fine-tuned to produce customized models that are particularly good in a specific domain such as healthcare, construction or finance.

Using SageMaker vs Bedrock for Foundation Model Access

Amazon Bedrock, being in the tools layer, allows customers to access foundation models directly without needing to provision virtual machines or infrastructure. This is one of its core features.

Accessing Foundation Models with Amazon Bedrock

Amazon Bedrock provides a set of APIs that can be used to interact with foundation models. It's important to note that Amazon Bedrock only supports FMs from a limited set of providers.

You can view the supported foundation models in the Amazon Bedrock docs.

Because the Amazon Bedrock APIs provide direct access to foundation models, you can use the AWS Console, AWS SDKs or AWS CLI to interact with them.

Before interacting with a model you'll need to enable the specific model for your account and region. There is a guide to enable model access in the Amazon Bedrock docs.

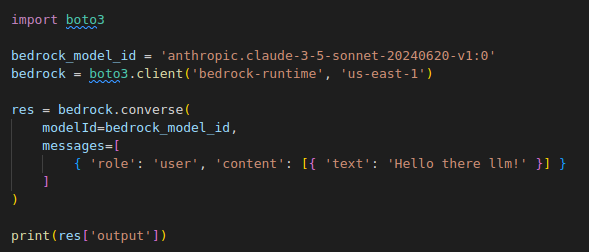

Here is an example of how you can access Claude 3.5 using Amazon Bedrock SDK in Python.
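A minimal sketch of such a call, assuming `boto3` is installed, AWS credentials are configured, and access to the model has been enabled in the chosen region. The model ID, region, and the helper names `build_converse_request` and `ask` are illustrative assumptions rather than values taken from this guide:

```python
# Assumed model ID and region; check the Bedrock console for what is enabled in your account.
MODEL_ID = "anthropic.claude-3-5-sonnet-20240620-v1:0"
REGION = "us-east-1"


def build_converse_request(prompt, model_id=MODEL_ID, max_tokens=512, temperature=0.2):
    """Return the keyword arguments for the bedrock-runtime converse() call."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }


def ask(prompt, client=None):
    """Send one user message and return the text of the first content block."""
    if client is None:
        import boto3  # imported lazily so the request builder works without the SDK

        client = boto3.client("bedrock-runtime", region_name=REGION)
    response = client.converse(**build_converse_request(prompt))
    return response["output"]["message"]["content"][0]["text"]
```

Calling `ask("Explain SageMaker vs Bedrock in one sentence.")` with no `client` argument creates a real `bedrock-runtime` client and incurs on-demand token charges. Because the request shape is the same for every model that supports Converse, switching providers should mostly be a matter of changing `MODEL_ID`.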

Note that the example uses the Amazon Bedrock Converse API. The Converse API provides a consistent request format for accessing LLMs, which matters because Bedrock gives access to multiple LLM providers whose models are otherwise invoked in different ways.

If you're not using the Converse API you'll need to consider model inference parameters for different model providers in the Amazon Bedrock docs.

Custom Models

One newer feature of Amazon Bedrock allows you to import externally trained models into Amazon Bedrock. This is useful for cases where you have trained your model elsewhere and would like Amazon Bedrock to manage the scaling of, and access to, the model.

As of the time of writing though, custom model import is in preview and only a few model architectures are supported.

Accessing Foundation Models with Amazon SageMaker

Accessing foundation models with SageMaker is not as easy as with Bedrock. This is because instead of just using the models, you are deploying the models first, and then accessing the models to perform inference.

SageMaker is more customizable so you will have more control of what & how models are deployed to the endpoints in your account. This allows you to implement your own logic to improve performance of an endpoint or to customize the model architecture.

One drawback to SageMaker is that you will only be able to access & deploy open source models. If you intend to use a closed model, like Claude for example, you'll only be able to do this via Amazon Bedrock.

When you deploy your own version of a model, you need to take ownership of how it is maintained, configured and updated. This usually requires more operational overhead for your team in the long term.

When you deploy a model, you are effectively deploying a SageMaker endpoint. SageMaker endpoints run in your account, so they will incur a cost based on SageMaker real-time inference pricing.

SageMaker endpoints also do not have consumer-facing authentication built in: requests must be signed with AWS IAM credentials. If you intend to provide your services via an API, you will need to implement an authentication / proxy layer between your consumers and the SageMaker endpoints.

You can read more details about SageMaker models for inference in the AWS docs.
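To illustrate the deploy-then-invoke flow described in this section, here is a hedged sketch of calling an already-deployed real-time endpoint with `boto3`. The helper names are hypothetical, and the `inputs`/`parameters` payload shape is an assumption that matches Hugging Face text-generation containers; other serving containers expect different JSON.

```python
import json


def build_payload(prompt, max_new_tokens=256):
    """Assumed request schema (Hugging Face text-generation style); adjust for your container."""
    return {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}


def invoke(endpoint_name, prompt, client=None):
    """Call a deployed SageMaker real-time endpoint and return the parsed JSON response."""
    if client is None:
        import boto3  # imported lazily; requests are signed with the caller's AWS credentials

        client = boto3.client("sagemaker-runtime")
    response = client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps(build_payload(prompt)),
    )
    # The response body is a stream, so it has to be read before parsing.
    return json.loads(response["Body"].read())
```

A call such as `invoke("my-llm-endpoint", "Hello")` (hypothetical endpoint name) only works for callers holding AWS credentials with permission to invoke the endpoint, which is why external consumers typically go through a proxy layer.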

What is SageMaker JumpStart?

Amazon SageMaker JumpStart is a quicker way to get started with LLMs deployed with Amazon SageMaker. JumpStart provides a wide variety of model providers (more than Amazon Bedrock). When you decide to use a model from SageMaker JumpStart, you'll be directed to Amazon SageMaker Studio to deploy the model.

What is SageMaker Studio?

Amazon SageMaker Studio is a web-based IDE for running ML workflows. It's one of the main interfaces you'll need to use if you want to make use of the models provided by SageMaker JumpStart.

Can you avoid SageMaker Studio? Amazon SageMaker Studio provides a nice user interface to help deploy the available foundation models but it's not required to deploy the models from JumpStart.

This article shows an example of how to use Amazon SageMaker JumpStart to deploy Llama3 via SageMaker Studio.

Alternatives to SageMaker Studio

Another popular way to access and deploy foundation models from SageMaker JumpStart is via the sagemaker SDK.

You can read the details about using pre-trained models in the SageMaker Python SDK docs.
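As a rough sketch of the SDK route, assuming the `sagemaker` Python package is installed and an execution role is available in your environment: the wrapper name `deploy_jumpstart_model` and the `model_cls` parameter are hypothetical (the latter exists only so the logic can be exercised without AWS), and the model ID in the usage note is an assumption to be checked against the JumpStart catalog.

```python
def deploy_jumpstart_model(model_id, instance_type=None, accept_eula=False, model_cls=None):
    """Deploy a JumpStart model to a real-time endpoint and return its predictor."""
    if model_cls is None:
        # Imported lazily so the function can be tested without the SageMaker SDK.
        from sagemaker.jumpstart.model import JumpStartModel

        model_cls = JumpStartModel
    kwargs = {"model_id": model_id}
    if instance_type is not None:
        kwargs["instance_type"] = instance_type  # otherwise the model's default is used
    model = model_cls(**kwargs)
    # Gated models such as Llama require explicitly accepting the license (EULA).
    return model.deploy(accept_eula=accept_eula)
```

For example, `predictor = deploy_jumpstart_model("meta-textgeneration-llama-3-8b", accept_eula=True)` would start a billable endpoint, after which `predictor.predict({"inputs": "Hello"})` sends a request and `predictor.delete_endpoint()` stops the charges.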

It's common to see customers using an IDE environment such as SageMaker Notebooks, JupyterHub or other Python-based data science environments to manage the training and deployment of foundation models.

If terms like Jupyter notebooks aren't familiar to you then it's recommended to use Amazon Bedrock instead until you have a use case that requires you to learn how to use these tools.

Also if you just want to invoke models and do things like build Agents without worrying about hosting the models yourself then go with Amazon Bedrock.

Comparison - Fine-Tuning Support

One of the more technical aspects of developing your own custom models is fine-tuning. Fine-tuning is supported in SageMaker because you have access to the underlying model, but you have to orchestrate and manage the training process yourself, typically through notebooks.

Fine-Tuning on Amazon SageMaker

The fine-tuning process in SageMaker requires triggering a training job. The job is managed by SageMaker and runs on instances that are managed by AWS. This orchestration of training jobs across one or multiple nodes removes some of the heavy lifting of configuring and running instances from your ML workflows.

Here is a video of how to do fine-tuning on Amazon SageMaker Studio using JumpStart.

Although SageMaker Training Jobs are managed by AWS, you can still customize the code to improve the implementation or performance of the model training process. This optimization usually requires more technical knowledge of how models are trained and developed.

You also have the option to choose which instance types the training happens on, so you can use different GPU instances, Inferentia or Trainium instances.

Here is an example of fine-tuning Llama3 using Amazon SageMaker JumpStart
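A minimal sketch of what a JumpStart fine-tuning job can look like with the SageMaker Python SDK, assuming the `sagemaker` package is installed and the training data is already in S3 in the format the model expects. The wrapper name `fine_tune`, the `estimator_cls` parameter (present only so the flow can be tested offline), and the hyperparameter names in the usage note are assumptions; valid hyperparameters differ per model.

```python
def fine_tune(model_id, train_s3_uri, hyperparameters, instance_type=None, estimator_cls=None):
    """Launch a managed SageMaker training job that fine-tunes a JumpStart model."""
    if estimator_cls is None:
        # Imported lazily so the function can be tested without the SageMaker SDK.
        from sagemaker.jumpstart.estimator import JumpStartEstimator

        estimator_cls = JumpStartEstimator
    # Gated models require accepting the license through the training environment.
    kwargs = {"model_id": model_id, "environment": {"accept_eula": "true"}}
    if instance_type is not None:
        kwargs["instance_type"] = instance_type  # e.g. a GPU or Trainium instance type
    estimator = estimator_cls(**kwargs)
    estimator.set_hyperparameters(**hyperparameters)
    # fit() starts the training job and, by default, waits for it to finish.
    estimator.fit({"training": train_s3_uri})
    return estimator
```

A call might look like `fine_tune("meta-textgeneration-llama-3-8b", "s3://my-bucket/train/", {"instruction_tuned": "True", "epoch": "3"})`, where the bucket is hypothetical. The returned estimator can then be deployed to an endpoint with `estimator.deploy()`.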

Fine-Tuning on Amazon Bedrock

Amazon Bedrock supports model customization but only for a very limited set of models and regions. The aim of the customization feature is that you will be able to fine-tune foundation models without needing to even use Amazon SageMaker.

In both services you'll need to provide datasets that are suitable to the models you're fine-tuning to improve the performance of those models for a specific task.

See the list of supported regions and models for customization.
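For comparison, a Bedrock customization job is started with a single API call rather than a training script. The sketch below assumes `boto3`, an IAM role that Bedrock can assume to read and write the S3 locations, and a base model that supports fine-tuning in your region. The function name and all argument values are hypothetical, and hyperparameter names vary by base model.

```python
def start_fine_tuning_job(job_name, custom_model_name, role_arn, base_model_id,
                          train_s3_uri, output_s3_uri, hyperparameters, client=None):
    """Start a Bedrock fine-tuning job and return its ARN."""
    if client is None:
        import boto3  # imported lazily so the function can be tested without the SDK

        client = boto3.client("bedrock")  # control-plane client, not bedrock-runtime
    response = client.create_model_customization_job(
        jobName=job_name,
        customModelName=custom_model_name,
        roleArn=role_arn,
        baseModelIdentifier=base_model_id,
        customizationType="FINE_TUNING",
        trainingDataConfig={"s3Uri": train_s3_uri},
        outputDataConfig={"s3Uri": output_s3_uri},
        # The API expects hyperparameter values as strings.
        hyperParameters={key: str(value) for key, value in hyperparameters.items()},
    )
    return response["jobArn"]
```

The job runs asynchronously; its status can be polled with the same client's `get_model_customization_job` call.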

As there is such a limited set of models supported in Amazon Bedrock for model customization, the best tool to use if you're looking to fine-tune is Amazon SageMaker.

Comparison - Performance

Performance becomes important when you start to productionize your applications. You need to be able to scale to meet the demands of your business needs.

Performance in this section will mostly focus on the throughput of the inference endpoints and not the performance of training, which could be its own topic.

The way LLMs have been used more recently requires large amounts of text to be sent to the model for it to be able to respond accordingly.

The use-case, prompting techniques & size of the model can be as influential on the performance as the CPUs, GPUs and Memory assigned to the endpoint processing the requests.

Performance Considerations for Amazon Bedrock

With Bedrock, the scaling and management of the endpoints is managed by AWS. This is useful for environments where you have a smaller team and don't want to spend time managing and scaling infrastructure.

By default, the models in Amazon Bedrock use on-demand mode. On-demand mode is useful when you have inconsistent usage requirements. The billing model supports this: you're only charged for the tokens in and tokens out of the endpoints (the throughput).

Bedrock also supports provisioned throughput for workloads with consistent usage and throughput requirements. With provisioned throughput you are charged per-hour for "model units" which provide for a specific level of throughput.

By using provisioned throughput you can increase the performance of your model but at the cost of having to pay more for the units.

See the provisioned throughput docs for more details about provisioned throughput in Amazon Bedrock.
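As a hedged illustration, provisioned throughput is purchased through the Bedrock control-plane API. The sketch below assumes `boto3` and a model that supports provisioned throughput in your region; the function name and argument values are hypothetical. Running it against a real account starts hourly billing, so treat it as a reference for the call shape rather than something to execute casually.

```python
def purchase_provisioned_throughput(name, model_id, model_units=1, commitment=None, client=None):
    """Buy model units for a model and return the provisioned model ARN."""
    if client is None:
        import boto3  # imported lazily so the function can be tested without the SDK

        client = boto3.client("bedrock")
    kwargs = {"provisionedModelName": name, "modelId": model_id, "modelUnits": model_units}
    if commitment is not None:
        # Accepted values are "OneMonth" or "SixMonths"; omit for no commitment where supported.
        kwargs["commitmentDuration"] = commitment
    response = client.create_provisioned_model_throughput(**kwargs)
    return response["provisionedModelArn"]
```

The returned ARN is then passed as the model ID in inference calls so that requests are served from the provisioned capacity.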

Using a managed service like Amazon Bedrock usually means that over time the performance should improve as AWS makes improvements to the service.

Performance of Amazon SageMaker Endpoints

When you provision an LLM using SageMaker, you are effectively choosing to use a SageMaker endpoint. This means you can choose the type of hardware that your model runs on.

The performance of a model in SageMaker depends on the implementation of the code in the endpoint. Tuning the performance of an endpoint can be challenging if you're new to LLM development & performance improvement.

AWS provides the SageMaker Inference Toolkit, and Hugging Face provides its own SageMaker Hugging Face Inference Toolkit. Both can be used to optimize the inference performance of models.

The flexibility in how the model is deployed also extends to the hardware. As with training, you're able to make use of Trainium or Inferentia2 instances to tune the performance further.

If deploying the model as a real-time endpoint with Amazon SageMaker, it's possible to improve the performance because the endpoint is deployed to instances and is always available to accept requests for inference. You may, however, need to also tweak your model code to see improvements to performance. Tweaking performance is not a trivial task.

SageMaker also has different provisioning modes such as batch and asynchronous. There is also serverless inference which does not support GPU instances and so limits the options for deploying models with SageMaker somewhat.

See here for the deploy model options for SageMaker.

What is the Neuron SDK?

The Neuron SDK is a set of tools developed to help customers use Amazon's custom silicon instances in production. Custom silicon includes Trainium & Inferentia instances.

Most modern LLM development environments rely heavily on Nvidia GPUs for the training and inference processes. For some use cases and model architectures it's possible to get performance improvements over GPUs using these custom machine learning chips. This often requires the support of AWS machine learning experts.

Pricing Models

The last factor to consider is the pricing models for both Amazon SageMaker and Amazon Bedrock.

When comparing the two there is overlap because with Amazon Bedrock you can provision throughput which moves the pricing from on-demand to a fixed price per hour.

With Amazon SageMaker you can use Serverless Inference (with smaller, non-GPU models) to host your models and get pricing that is closer to on-demand.

Pricing in Amazon Bedrock

Here are details of Amazon Bedrock Pricing.

The pricing is based on the model that you choose to use and then on the number of input and output tokens processed by the model. A token is a short sequence of characters, the basic unit of text that a model uses to process the prompt and user input.
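To make the token-based arithmetic concrete, here is a small worked example. The prices and request volume are hypothetical placeholders, not quotes from the Bedrock pricing page; real prices vary by model and region.

```python
def on_demand_cost(input_tokens, output_tokens, price_in_per_1k, price_out_per_1k):
    """Cost in dollars of one request under per-token, on-demand pricing."""
    return (input_tokens / 1000) * price_in_per_1k + (output_tokens / 1000) * price_out_per_1k


# Hypothetical prices in dollars per 1,000 tokens.
PRICE_IN = 0.003
PRICE_OUT = 0.015

# A request with a 2,000-token prompt and a 500-token response.
per_request = on_demand_cost(2000, 500, PRICE_IN, PRICE_OUT)
# Assumed volume of 100,000 such requests per month.
per_month = per_request * 100_000

print(f"per request: ${per_request:.4f}, per month: ${per_month:,.2f}")
```

With these assumed numbers a request costs roughly 1.35 cents, and output tokens account for more than half of that even though the response is a quarter of the prompt's length. Trimming response length can therefore matter as much as trimming prompts.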

For certain features such as Bedrock Agents and Knowledge Bases you are not charged for the features but instead for the underlying usage of the Amazon Bedrock models.

When you start out building LLM-enabled products, token-based, on-demand pricing can be useful because you don't incur costs when you are not calling the endpoints. If you need more throughput as you scale up, you can move to provisioned throughput, which is charged per hour with commitment terms of 1 or 6 months.

If you start to use a model extensively with high levels of throughput, then it can be cheaper to move your model to Amazon SageMaker. This assumes you're using an open source model.

Pricing in Amazon SageMaker

Here are details of Amazon SageMaker Pricing.

With SageMaker the cost calculation becomes more multi-dimensional. As mentioned above, when deploying LLMs or FMs to Amazon SageMaker you get to choose the hardware types and instance sizes for the SageMaker endpoints that you deploy.

Real-time SageMaker endpoints can scale up and down using auto scaling, so the number of instances factors into the price. You are charged for the size & number of instances for as long as they are running, so SageMaker is better suited when you have budget for it or when you have a predictable, consistent traffic pattern.
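As a sketch of how auto scaling is attached to a real-time endpoint, the snippet below uses the Application Auto Scaling API through `boto3`. The function name, endpoint name, capacity limits, and target value are hypothetical; `AllTraffic` is assumed to be the variant name, which is the default for endpoints created through the SageMaker Python SDK.

```python
def configure_autoscaling(endpoint_name, variant_name="AllTraffic", min_instances=1,
                          max_instances=4, invocations_per_instance=100.0, client=None):
    """Register an endpoint variant for scaling and attach a target-tracking policy."""
    if client is None:
        import boto3  # imported lazily so the function can be tested without the SDK

        client = boto3.client("application-autoscaling")
    resource_id = f"endpoint/{endpoint_name}/variant/{variant_name}"
    dimension = "sagemaker:variant:DesiredInstanceCount"
    # Bound the instance count, which also bounds the hourly cost of the endpoint.
    client.register_scalable_target(
        ServiceNamespace="sagemaker",
        ResourceId=resource_id,
        ScalableDimension=dimension,
        MinCapacity=min_instances,
        MaxCapacity=max_instances,
    )
    # Add or remove instances to hold invocations per instance near the target.
    client.put_scaling_policy(
        PolicyName=f"{endpoint_name}-invocations-target",
        ServiceNamespace="sagemaker",
        ResourceId=resource_id,
        ScalableDimension=dimension,
        PolicyType="TargetTrackingScaling",
        TargetTrackingScalingPolicyConfiguration={
            "TargetValue": invocations_per_instance,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"
            },
        },
    )
    return resource_id
```

The maximum capacity acts as a cost ceiling: with a cap of four instances, the endpoint should never cost more than four times the hourly instance price.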

One consideration is that when you deploy a SageMaker endpoint, there's only a price for the resources that are provisioned and not the throughput. If you have a model optimized for high-throughput you could get a better price per token than via Bedrock.

If you have a high-throughput inference application, then doing the calculations to compare the costs of Bedrock vs SageMaker can reveal some savings. If your model is specifically tuned for Amazon custom silicon then you could also get a better price for inference by using those instance types. Once you've done the calculations you can consider reducing your monthly spend via ML Savings Plans.
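The comparison comes down to a fixed hourly cost versus a per-token cost. The sketch below works through the break-even point using made-up numbers: the instance price, sustained throughput, and blended Bedrock price are all hypothetical and should be replaced with figures from the pricing pages and your own load tests.

```python
def endpoint_price_per_1k_tokens(instance_price_per_hour, instance_count, tokens_per_second):
    """Effective price per 1,000 tokens for an endpoint running at a sustained throughput."""
    tokens_per_hour = tokens_per_second * 3600
    return instance_price_per_hour * instance_count / tokens_per_hour * 1000


def break_even_tokens_per_hour(instance_price_per_hour, instance_count, bedrock_price_per_1k):
    """Hourly token volume above which the fixed-price endpoint is cheaper than per-token pricing."""
    return instance_price_per_hour * instance_count / bedrock_price_per_1k * 1000


# Hypothetical inputs.
INSTANCE_PRICE = 7.00      # dollars per instance-hour
SUSTAINED_TPS = 1000       # tokens per second the tuned endpoint can sustain
BEDROCK_BLENDED = 0.004    # dollars per 1,000 tokens, averaged over input and output

endpoint_rate = endpoint_price_per_1k_tokens(INSTANCE_PRICE, 1, SUSTAINED_TPS)
break_even = break_even_tokens_per_hour(INSTANCE_PRICE, 1, BEDROCK_BLENDED)

print(f"endpoint: ${endpoint_rate:.5f} per 1k tokens at full load")
print(f"break-even: {break_even:,.0f} tokens/hour ({break_even / 3600:.0f} tokens/second)")
```

With these assumed figures the endpoint only wins if it is kept busy at roughly half of its sustained capacity or more. Below that level, idle instance time makes on-demand tokens the cheaper option.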

As stated above, any gain would be for open source models only, as the closed-source models are only available via the Bedrock APIs.

Final Notes on Amazon SageMaker vs Amazon Bedrock

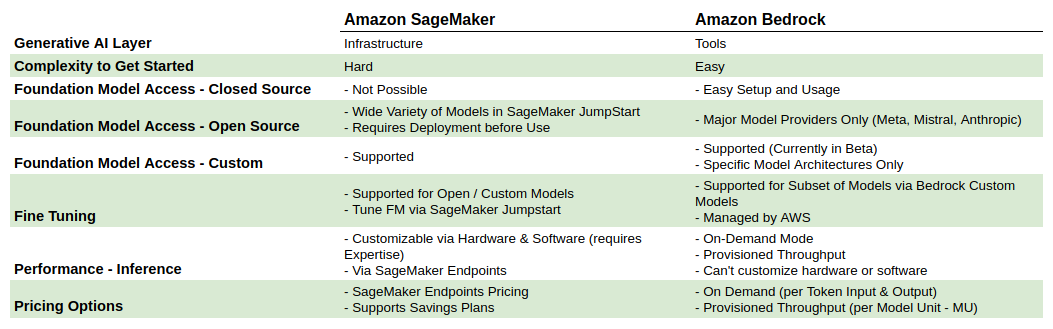

In summary, this article looked at the positioning of Amazon Bedrock and Amazon SageMaker in the AWS Generative AI stack. The chart above shows some of the trade-offs and benefits of SageMaker vs Bedrock from the discussion.

Hopefully this article has helped guide you towards a better understanding of the AWS Generative AI stack and how to compare the differences between Amazon SageMaker and Amazon Bedrock.

Our team helps businesses and startups build and scale their infrastructure using cloud technologies. If you're looking for Cost Optimization on your existing workloads or need an Architecture Review of a proposed workload, we are here to guide and assist.

You can contact us for more info.