Managing Rollout of Config Changes in Python using AWS AppConfig

Recent coverage of how the rollout of a CrowdStrike update caused massive worldwide outages of Windows machines points to the fragility of the global IT stack. It also has many operations experts rethinking their deployment strategies to prevent outages on that scale.

In today's fast-paced software development landscape, managing configuration changes and controlling application behavior are crucial. As applications grow in complexity, the ability to centrally manage and adjust features becomes a key factor in maintaining agility, reducing risks, and improving end-user experience. Effective configuration management allows teams to respond quickly to changing requirements, toggle features on or off, and roll out updates without disrupting the entire application.

This article outlines some best practices for managing application configuration. Near the end, there is an example of a configuration class in Python that lets you update the behavior of your apps in their different environments without redeploying them.

What is AWS AppConfig?

AWS AppConfig is a managed service that helps you centrally manage application configuration and control the rollout of functionality and features to your applications. Useful capabilities include validating configuration before deployment and automatically rolling back changes if issues are detected.

Best Practice - Disconnect Deployment from Feature Release

For smaller companies, it's common to see a blur between deployment and feature release: when you deploy a new feature, it's immediately live for every user. This is fine while your product is small and you're developing features rapidly, but in the extreme case it makes teams hesitant to deploy at all. You avoid deploying because a feature is not ready yet.

To improve agility, it's important to decouple deployment from feature release. This is good for developers because not everything you deploy is a feature; there are bug fixes too. When everyone is merging into the same dev environment, being able to change how the application behaves in each environment means you can keep deploying features without affecting your users.

A good target is for product managers or business stakeholders to be able to control the launch of a new feature. If they can, your business team can plan launch events and marketing around the new feature or service.

Best Practice - Feature Flags

Feature flags are a technique for managing and controlling the launch of new features or functionality in your apps. The idea is that each new feature you build sits behind a gate, or flag. In real code this often takes the form of an if statement: when the flag is enabled, the new functionality is used; otherwise the existing functionality is used.

```python
def some_functionality(params):
    # When the flag is on, take the new code path; otherwise keep the existing behavior.
    if some_flag:
        return new_functionality(params)
    return old_functionality(params)
```

This gives you control over how your application behaves without redeploying it. Once feature flags are in place, the next question is how to manage the rollout of each feature.
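
To make the flag itself configurable, it can be read from a configuration mapping at call time rather than hard-coded. The sketch below assumes the flag is stored under a `featureA` key (the same key used in the example AppConfig configuration later in this article) and uses trivial stand-ins for the old and new code paths; all function names are hypothetical.

```python
from typing import Any, Mapping


def old_functionality(params: dict) -> str:
    # Hypothetical stand-in for the existing behavior.
    return f"old:{params['query']}"


def new_functionality(params: dict) -> str:
    # Hypothetical stand-in for the new feature.
    return f"new:{params['query']}"


def some_functionality(params: dict, config: Mapping[str, Any]) -> str:
    # The flag is looked up on every call, so changing the config changes
    # behavior without a redeploy. A missing flag defaults to "off".
    if config.get("featureA", False):
        return new_functionality(params)
    return old_functionality(params)
```

Defaulting a missing flag to `False` means a new feature stays dark until someone explicitly turns it on.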

The rollout needs to be managed because, even when the code works correctly, the launch may affect business factors. This happens after deployment, when the feature goes live. For example, you might release a new feature and see checkout volume decline. In that case the code is not at fault; user behavior is changing.

In these cases you want to be able to roll back the change and rethink your approach.
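
One common way to make a rollout gradual and reversible is percentage-based bucketing. This technique is not part of the original article, and the `in_rollout` helper below is a hypothetical sketch. Each user is hashed into a stable bucket from 0 to 99, and the feature is on for buckets below a configured percentage. Raising the percentage widens exposure. Dropping it to 0 rolls the feature back for everyone.

```python
import hashlib


def in_rollout(user_id: str, feature: str, percentage: float) -> bool:
    """Return True if user_id falls inside the first `percentage` % of buckets."""
    # Hashing feature + user gives each feature an independent but stable
    # assignment, so the same user stays in (or out) as the percentage grows.
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100  # 0..99
    return bucket < percentage
```

Because bucketing is deterministic, users who saw the feature at 20% still see it at 50%, which keeps the experience consistent while the rollout widens.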

Best Practice - Environments, Partitions & Cells

In the world of B2B SaaS, it's common to have multiple instances of the same application deployed for different tenants. In this case feature flags and managed configuration are important, because you may deploy the same code for every tenant while each tenant has customizations specific to their deployment.

Beyond a certain scale, as you move towards more silo-based isolation, it becomes essential to keep updating your code frequently (so you can fix bugs and make performance improvements) without leaking functionality between tenants. If you build a feature for tenant A, you want to ensure that only tenant A can access it.
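
A tenant-scoped flag can be as simple as an allow-list in the managed configuration. The sketch below assumes a hypothetical schema in which each entry under a `features` key has an optional global `enabled` switch and a `tenants` list. The schema and function name are illustrative, not something AppConfig defines.

```python
from typing import Any, Mapping


def is_enabled_for_tenant(config: Mapping[str, Any], feature: str, tenant_id: str) -> bool:
    """Check a hypothetical per-tenant allow-list in the managed configuration."""
    flag = config.get("features", {}).get(feature, {})
    # A feature is off unless it is enabled globally or explicitly for this tenant,
    # so code shipped to every tenant only lights up where it is allowed.
    return bool(flag.get("enabled", False)) or tenant_id in flag.get("tenants", [])
```

For example, with `{"features": {"featureA": {"tenants": ["tenant-a"]}}}`, tenant A gets the feature and every other tenant keeps the old behavior, even though they all run the same build.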

On the scaling side, some software eventually starts to push against AWS account limits. This is most common in products that deploy backend stacks made up of other AWS services. In this case it's common to partition the production environment into cells. There are a number of strategies for partitioning:

- Partition based on customer size

- Partition based on customer adoption level

- Partition based on region

At this stage you may have multiple configurations, one for each cell. To be pragmatic, you'd want to deploy to your least-critical customers before you deploy to your most critical ones.
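
The "least-critical first" rule can be expressed as a small planning step that groups cells into deployment waves. In the sketch below, the `Cell` type, the numeric `criticality` score and the wave size are all hypothetical. How you score a cell would depend on which partitioning strategy you chose (customer size, adoption level or region).

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Cell:
    name: str
    criticality: int  # hypothetical score: lower means less critical customers


def rollout_waves(cells: List[Cell], wave_size: int) -> List[List[str]]:
    """Group cells into deployment waves, least-critical cells first."""
    # Sort by criticality, breaking ties by name so the plan is deterministic.
    ordered = sorted(cells, key=lambda c: (c.criticality, c.name))
    return [[c.name for c in ordered[i:i + wave_size]]
            for i in range(0, len(ordered), wave_size)]
```

For example, cells `prod-01` (criticality 0), `prod-03` (1) and `prod-02` (2) with a wave size of 2 produce the waves `[["prod-01", "prod-03"], ["prod-02"]]`.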

Best Practice - Operational Response

The last point about centrally managed configuration concerns responding to incidents or high traffic. Common guidance is to disable non-critical features or adjust the application's behavior so that it can recover and stay usable.

For example, a configuration setting could tell the application to write events to disk instead of sending them to a log server that is having issues. You might also disable certain search capabilities around Black Friday or Cyber Monday to maintain the minimum viable usability of your app.
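
Both examples come down to reading an operational switch from configuration at runtime. The sketch below assumes two hypothetical keys, `eventSink` (`"remote"` or `"disk"`) and `searchEnabled`. The remote sender is passed in as a callable so the sketch doesn't depend on any particular logging client, and the search body is a placeholder.

```python
import json
from pathlib import Path
from typing import Any, Callable, List, Mapping


def emit_event(event: dict, config: Mapping[str, Any],
               send_remote: Callable[[dict], None], spool_path: Path) -> str:
    """Route an event according to the hypothetical 'eventSink' setting."""
    if config.get("eventSink", "remote") == "disk":
        # Degraded mode: append JSON lines locally so events can be replayed later.
        with spool_path.open("a", encoding="utf-8") as f:
            f.write(json.dumps(event) + "\n")
        return "disk"
    send_remote(event)
    return "remote"


def search(query: str, config: Mapping[str, Any]) -> List[str]:
    """Return results unless the hypothetical 'searchEnabled' kill switch is off."""
    if not config.get("searchEnabled", True):
        return []  # shed load: search is treated as non-critical
    return [f"result for {query}"]  # placeholder for the real search backend
```

During an incident, an operator flips `eventSink` to `"disk"` or `searchEnabled` to `false` in AppConfig, and running instances pick up the change on their next config refresh without a redeploy.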

AWS AppConfig Functionality

There are two ways to use AWS AppConfig. You can call it directly through the APIs and SDKs, or you can deploy the AWS AppConfig Agent to handle retrieving and caching configurations for your applications.
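
For the direct approach, the boto3 `appconfigdata` client exposes a session-based API. `start_configuration_session` returns an initial token, and each `get_latest_configuration` call returns the configuration plus the token and interval for the next poll. An empty `Configuration` body means nothing has changed since the last poll. The `AppConfigPoller` class below is a minimal sketch of that loop, not the gist's `DynamicConfig`. It assumes JSON content, and it accepts an optional client so it can be exercised without AWS credentials.

```python
import json
import time
from typing import Any, Dict, Optional


class AppConfigPoller:
    """Sketch: poll AWS AppConfig through the appconfigdata session API."""

    def __init__(self, application: str, environment: str, profile: str,
                 client: Optional[Any] = None, min_poll_seconds: int = 60) -> None:
        if client is None:
            import boto3  # lazy import so the class can be exercised with a stub client
            client = boto3.client("appconfigdata")
        self._client = client
        session = client.start_configuration_session(
            ApplicationIdentifier=application,
            EnvironmentIdentifier=environment,
            ConfigurationProfileIdentifier=profile,
            RequiredMinimumPollIntervalInSeconds=min_poll_seconds,
        )
        self._token = session["InitialConfigurationToken"]
        self._next_poll_at: Optional[float] = None
        self._config: Dict[str, Any] = {}

    def get(self) -> Dict[str, Any]:
        # Between polls, serve the cached copy instead of calling the service again.
        if self._next_poll_at is not None and time.monotonic() < self._next_poll_at:
            return self._config
        resp = self._client.get_latest_configuration(ConfigurationToken=self._token)
        # Each response carries the token to use on the next poll.
        self._token = resp["NextPollConfigurationToken"]
        self._next_poll_at = time.monotonic() + resp["NextPollIntervalInSeconds"]
        body = resp["Configuration"].read()
        if body:  # an empty body means "unchanged since the last poll"
            self._config = json.loads(body)
        return self._config
```

Usage would look like `AppConfigPoller("api", "prod-01", "default").get()["temperature"]`, using the application, environment and profile names from the example below.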

For ECS and EKS deployments the agent is the better approach, because you can deploy it as a sidecar and access it through an HTTP endpoint exposed by the public.ecr.aws/aws-appconfig/aws-appconfig-agent:2.x container.
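
With the agent running as a sidecar, the application only makes a local HTTP GET. The agent serves configurations at a path of the form `/applications/{application}/environments/{environment}/configurations/{profile}`. The sketch below uses only the standard library. It assumes the agent listens on `http://localhost:2772` (the address used later in this article) and that the configuration is JSON. The helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request


def agent_config_url(base_url: str, application: str, environment: str, profile: str) -> str:
    # Build the agent's local retrieval path, escaping each identifier.
    parts = [urllib.parse.quote(p, safe="") for p in (application, environment, profile)]
    return "{}/applications/{}/environments/{}/configurations/{}".format(
        base_url.rstrip("/"), *parts)


def fetch_from_agent(base_url: str, application: str, environment: str,
                     profile: str, timeout: float = 2.0) -> dict:
    # The agent caches and refreshes the config itself, so this is a cheap local call.
    url = agent_config_url(base_url, application, environment, profile)
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read())
```

Calling `fetch_from_agent("http://localhost:2772", "api", "prod-01", "default")` returns the current configuration as a dict.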

Create an initial configuration in AWS AppConfig

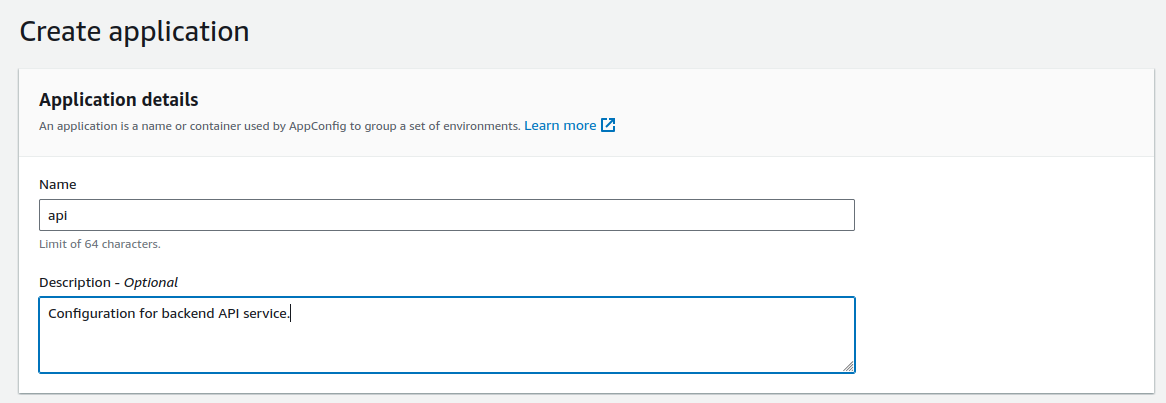

In this simple example, the application being configured is a backend API. The application is created via the console.

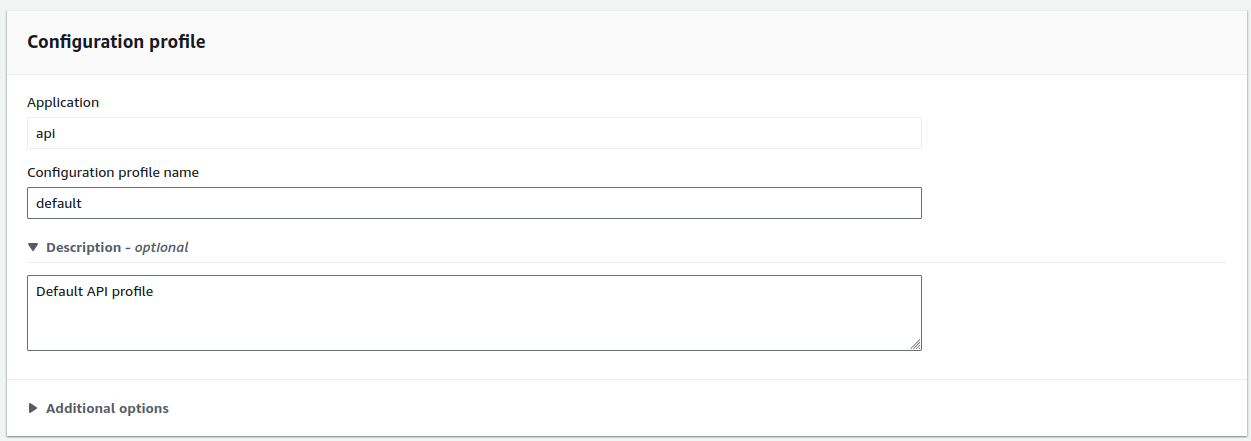

AppConfig has the notion of a configuration "profile". In this article, a profile represents a distinct type of configuration within the application boundary. For this example, a default profile is created using a "Freeform Configuration". You may decide to have profiles per tenant (e.g. tenant-01, tenant-02) or per tier (e.g. tenant-free, tenant-basic, tenant-pro).

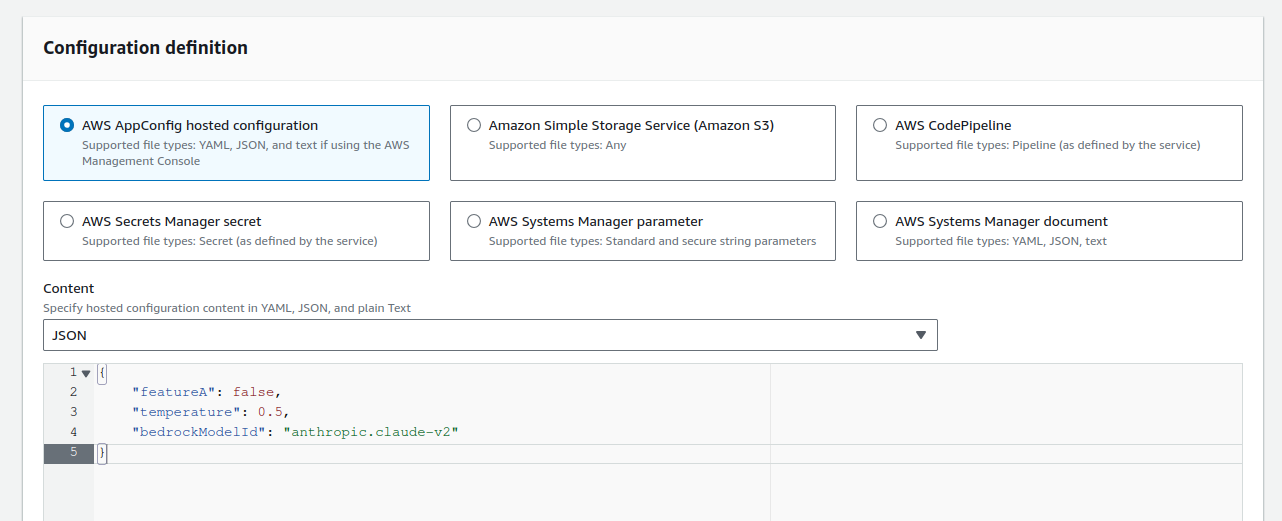

The initial configuration is for a hypothetical API that integrates with AWS Bedrock. We want to centrally manage the AWS Bedrock model ID and temperature, and gradually roll out the config to our API whenever we update either value.
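
To show how those two settings would drive the API, here is a sketch that builds the keyword arguments for the boto3 `bedrock-runtime` client's `invoke_model` call from the managed config. It assumes the config keys `temperature` and `bedrockModelId` shown below and the Claude v2 text-completion request format (`prompt` and `max_tokens_to_sample`). The function name and the `max_tokens` default are hypothetical.

```python
import json
from typing import Any, Mapping


def build_bedrock_request(config: Mapping[str, Any], prompt: str,
                          max_tokens: int = 300) -> dict:
    """Build invoke_model kwargs from centrally managed settings."""
    body = {
        # Claude v2 / v2.1 text-completion prompt format on Bedrock.
        "prompt": f"\n\nHuman: {prompt}\n\nAssistant:",
        "max_tokens_to_sample": max_tokens,
        "temperature": config["temperature"],  # comes from AppConfig
    }
    return {
        "modelId": config["bedrockModelId"],  # comes from AppConfig
        "contentType": "application/json",
        "accept": "application/json",
        "body": json.dumps(body),
    }
```

The result would be passed as `boto3.client("bedrock-runtime").invoke_model(**kwargs)`. Because the model ID and temperature are read per request, a config rollout changes them without touching the code.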

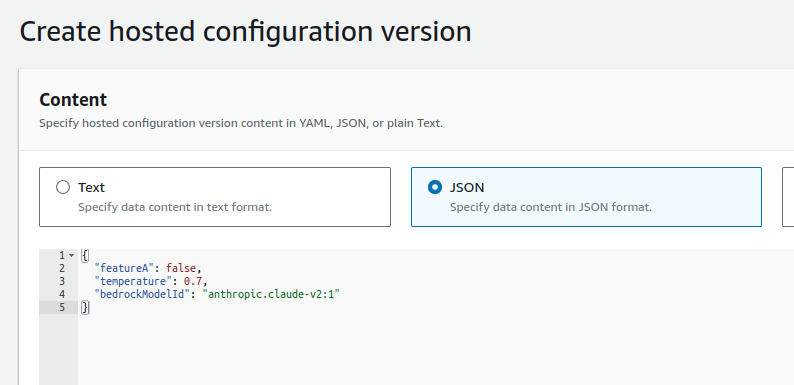

The configuration can be defined in JSON or YAML; this example uses JSON. You can also provide a validator to ensure the config is valid before it is applied to each environment. This basic example skips that step.
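
AppConfig's own validators are JSON Schema documents or AWS Lambda functions. Even without one, a local pre-flight check in Python can catch obvious mistakes before a new version is created. The rules below are hypothetical examples for this article's config: a boolean `featureA`, a temperature between 0 and 1, and an Anthropic model ID.

```python
from typing import Any, Mapping

# Hypothetical rules for the example config; adjust to your own schema.
ALLOWED_MODEL_PREFIXES = ("anthropic.",)


def validate_config(config: Mapping[str, Any]) -> None:
    """Raise ValueError if the config would be unsafe to deploy."""
    if not isinstance(config.get("featureA"), bool):
        raise ValueError("featureA must be a boolean")
    temperature = config.get("temperature")
    # bool is a subclass of int in Python, so reject it explicitly.
    is_number = isinstance(temperature, (int, float)) and not isinstance(temperature, bool)
    if not is_number or not 0.0 <= temperature <= 1.0:
        raise ValueError("temperature must be a number between 0 and 1")
    model_id = config.get("bedrockModelId")
    if not isinstance(model_id, str) or not model_id.startswith(ALLOWED_MODEL_PREFIXES):
        raise ValueError("bedrockModelId must be an Anthropic model ID")
```

Running this in CI before a new configuration version is created gives a similar safety net to an AppConfig validator, though it does not replace one.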

It's also possible to choose where the config is stored. Using tools like S3 or Secrets Manager can help with integration into other parts of your application.

The initial configuration in this example is as follows:

```json
{
  "featureA": false,
  "temperature": 0.5,
  "bedrockModelId": "anthropic.claude-v2"
}
```

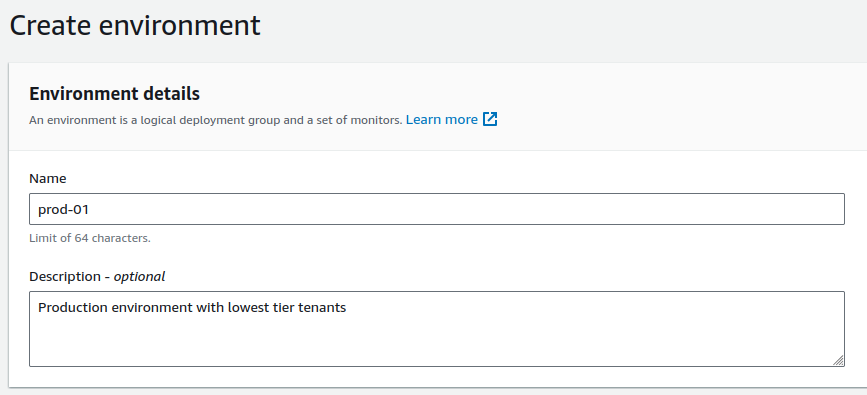

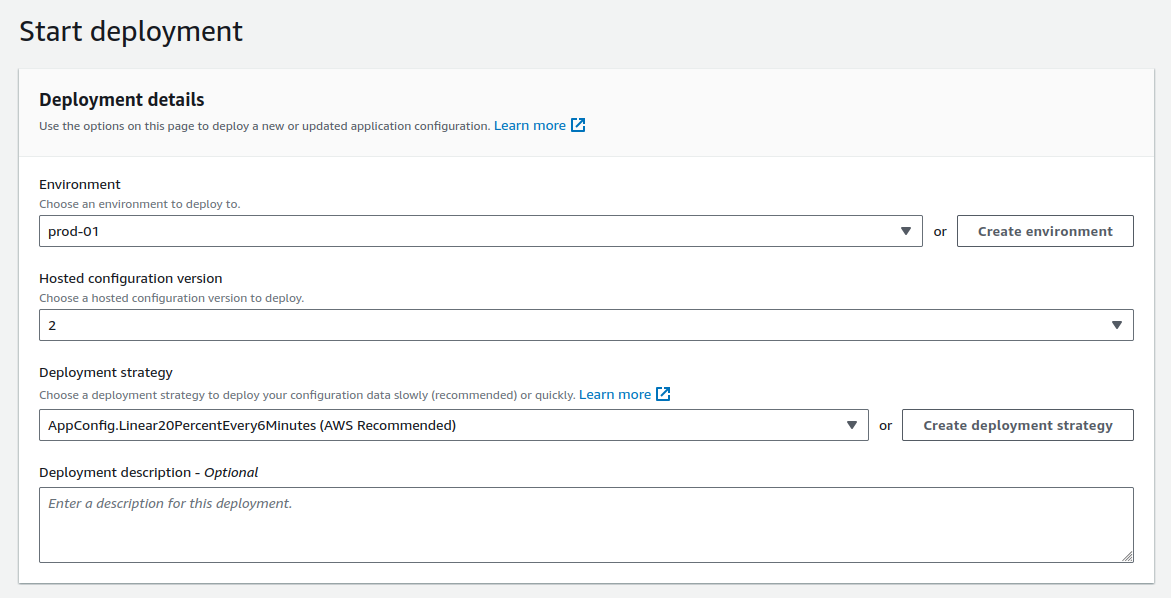

After confirming the configuration, the last step is to define an environment to deploy the configuration to. See the partitioning best practices above to decide which environments to create. Common environments are dev, staging and prod. If you have a cell-based multi-tenant setup, you may further split prod into prod-01, prod-02 and so on to allow a gradual rollout across cells.

For this example, an environment called prod-01 is created, containing our lowest-tier (non-critical) customers.

Once the environment is created, the initial configuration is deployed with the AppConfig.AllAtOnce strategy so that it is available quickly, before it is integrated into the application.

The deployment should start immediately and complete after about 10 minutes, which is the strategy's bake time.

Integrate AWS AppConfig with a Python Application

To integrate the configuration, a Python class was created; its code is in this gist on GitHub. To use it, create an instance of the configuration in one of the following two ways:

```python
# Access AWS AppConfig directly via the APIs
dynamic_config = DynamicConfig(
    'api',
    'prod-01',
    'default',
    fetch_type='direct'
)

# Access AWS AppConfig via the AWS AppConfig Agent deployed at http://localhost:2772
dynamic_config = DynamicConfig(
    'api',
    'prod-01',
    'default',
    fetch_type='agent',
    base_url='http://localhost:2772'
)
```

For serverless or basic environments the direct approach is better; for container environments such as ECS or EKS, the AWS AppConfig Agent approach is better. Deploying the agent as a sidecar is not covered here.

The class extends dict, so accessing the configuration is simple:

```python
temperature = dynamic_config['temperature']
bedrock_model_id = dynamic_config['bedrockModelId']
```

In the background, the DynamicConfig class handles basic caching of the response when the direct method is used. When the agent is used, caching is managed by the agent.
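
The gist's implementation isn't reproduced here. As a rough illustration of the same idea, the hypothetical `CachedConfig` below is a dict subclass with basic time-based caching. It takes any zero-argument `fetch` function that returns the latest config dict (for example, a direct AppConfig call or an agent request) and re-fetches only after a TTL has passed.

```python
import time
from typing import Any, Callable, Dict, Optional


class CachedConfig(dict):
    """Hypothetical dict subclass that refreshes itself from `fetch` after a TTL."""

    def __init__(self, fetch: Callable[[], Dict[str, Any]], ttl_seconds: float = 30.0) -> None:
        super().__init__()
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._fetched_at: Optional[float] = None  # monotonic time of the last fetch

    def _refresh_if_stale(self) -> None:
        now = time.monotonic()
        if self._fetched_at is None or now - self._fetched_at >= self._ttl:
            latest = self._fetch()
            # Replace contents in place so existing references see the new values.
            self.clear()
            self.update(latest)
            self._fetched_at = now

    def __getitem__(self, key: str) -> Any:
        self._refresh_if_stale()
        return super().__getitem__(key)

    def get(self, key: str, default: Any = None) -> Any:
        self._refresh_if_stale()
        return super().get(key, default)
```

Only `[]` and `.get()` trigger a refresh in this sketch; iteration and `in` see whatever was last fetched, which is a trade-off a fuller implementation would need to address.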

Updating the Application Configuration

Once the application is deployed with DynamicConfig integrated, a new configuration version is required to gradually deploy an update.

From the AWS AppConfig console, navigate to the default configuration profile and create a new configuration version. In this example the temperature is updated to 0.7 and the AWS Bedrock model ID is updated to use Claude 2.1.

With the version created, the "Start Deployment" option becomes available. Select the prod-01 environment. In this case the AppConfig.Linear20PercentEvery6Minutes deployment strategy is used, which gradually rolls out the config change to the different application instances.

Choosing "Start Deployment" begins deploying the updated configuration.
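
The same two console steps can be scripted with the boto3 `appconfig` client: `create_hosted_configuration_version` stores the new content, and `start_deployment` rolls that version out to an environment. The sketch below assumes the configuration lives in the AppConfig hosted store and that you have the application, profile and environment IDs (the API expects IDs here, not the console names). Passing the predefined strategy name as `DeploymentStrategyId` is an assumption worth checking against your account. The function name is hypothetical.

```python
import json
from typing import Any, Mapping, Optional


def deploy_new_version(app_id: str, profile_id: str, env_id: str,
                       content: Mapping[str, Any],
                       strategy_id: str = "AppConfig.Linear20PercentEvery6Minutes",
                       client: Optional[Any] = None) -> int:
    """Create a hosted configuration version and start a gradual deployment of it."""
    if client is None:
        import boto3  # lazy import so the function can be exercised with a stub client
        client = boto3.client("appconfig")
    version = client.create_hosted_configuration_version(
        ApplicationId=app_id,
        ConfigurationProfileId=profile_id,
        Content=json.dumps(content).encode("utf-8"),
        ContentType="application/json",
    )
    deployment = client.start_deployment(
        ApplicationId=app_id,
        EnvironmentId=env_id,
        DeploymentStrategyId=strategy_id,
        ConfigurationProfileId=profile_id,
        ConfigurationVersion=str(version["VersionNumber"]),  # API expects a string
    )
    return deployment["DeploymentNumber"]
```

For this article's update, `content` would be `{"featureA": False, "temperature": 0.7, "bedrockModelId": "anthropic.claude-v2:1"}`.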

Extra Steps

To improve the deployment process further, you could add monitors (CloudWatch alarms) to the deployment environment so that AWS AppConfig can react if a metric becomes unhealthy. If an alarm fires during a deployment, AWS AppConfig reverts to the previous version of the configuration.
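
Monitors are attached to an environment as pairs of a CloudWatch alarm ARN and an IAM role ARN that AppConfig uses to read the alarm. The sketch below sets them with the boto3 `appconfig` client's `update_environment`. The ARNs and the function name are hypothetical. This sketch assumes the `Monitors` list you pass replaces the environment's current list, so include every alarm you want to keep.

```python
from typing import Any, List, Optional


def attach_rollback_alarms(app_id: str, env_id: str, alarm_arns: List[str],
                           alarm_role_arn: str, client: Optional[Any] = None) -> None:
    """Attach CloudWatch alarms as AppConfig monitors on an environment."""
    if client is None:
        import boto3  # lazy import so the function can be exercised with a stub client
        client = boto3.client("appconfig")
    client.update_environment(
        ApplicationId=app_id,
        EnvironmentId=env_id,
        # One monitor per alarm; the role lets AppConfig read the alarm state.
        Monitors=[{"AlarmArn": arn, "AlarmRoleArn": alarm_role_arn} for arn in alarm_arns],
    )
```

A typical alarm to attach would track an error rate or a business metric such as checkout volume, which ties the rollback back to the post-deployment risks discussed earlier.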

Multiple environments could be configured and updated as part of an AWS CodePipeline pipeline. At each stage, you could update the config and trigger the rollout via an AWS Lambda function or an AWS Step Functions state machine. This would let you deploy configuration changes in waves: dev -> staging -> prod-01 -> prod-02 and so on.
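
The core of such a wave can be sketched as a loop that could run inside a Lambda function or a Step Functions task. For each environment in order, it deploys one configuration version, polls `get_deployment` until the state is `COMPLETE`, and stops as soon as a deployment is rolled back. The function name, poll interval and environment list are hypothetical, and the injectable `sleep` exists only to make the sketch testable.

```python
import time
from typing import Any, Callable, List, Optional


def deploy_in_waves(app_id: str, profile_id: str, version: str, env_ids: List[str],
                    strategy_id: str, client: Optional[Any] = None,
                    poll_seconds: float = 30.0,
                    sleep: Callable[[float], None] = time.sleep) -> List[str]:
    """Deploy one config version to each environment in order, stopping on rollback."""
    if client is None:
        import boto3  # lazy import so the function can be exercised with a stub client
        client = boto3.client("appconfig")
    completed: List[str] = []
    for env_id in env_ids:
        number = client.start_deployment(
            ApplicationId=app_id, EnvironmentId=env_id,
            DeploymentStrategyId=strategy_id,
            ConfigurationProfileId=profile_id, ConfigurationVersion=version,
        )["DeploymentNumber"]
        while True:
            state = client.get_deployment(
                ApplicationId=app_id, EnvironmentId=env_id, DeploymentNumber=number,
            )["State"]
            if state == "COMPLETE":
                completed.append(env_id)
                break
            if state in ("ROLLED_BACK", "REVERTED"):
                # Halt the wave: later (more critical) environments keep the old version.
                return completed
            sleep(poll_seconds)
    return completed
```

Ordering `env_ids` from least to most critical (for example `["dev", "staging", "prod-01", "prod-02"]`) means a bad change is caught and rolled back before it reaches your most important customers.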

Conclusion

This article covered best practices for rolling out application configuration gradually at scale, and provided a basic example of how to integrate AWS AppConfig into a Python application.

Our team helps businesses and startups build and scale their infrastructure using cloud technologies. If you're looking for Cost Optimization on your existing workloads or need an Architecture Review of a proposed workload, we are here to guide and assist.

You can contact us for more info.